In the first two parts of this trilogy, we defined Data Quality dimensions and explored the root causes of "dirty data" in the supply chain. We concluded that a holistic approach must address people, processes, and systems. In this article, we will do a deep dive on how AI is creating new opportunities to improve data quality.

1. Moving beyond "Garbage In, Garbage Out"

The conventional wisdom in enterprise data management is that unless your data is in order you cannot really benefit from AI. And this is true if you think about AI in the context of downstream activities such as extracting insights.

But AI can also be used to improve data quality, perhaps in ways that you have not thought of before. You don't need to hold off on AI because your data is not good; rather, you can use AI proactively to improve your data quality!

Data scientists have used predictive AI (machine learning) in data quality improvement efforts for well over a decade now. The conservative world of operational systems may have been slow to apply these techniques, but they do exist.

GenAI brings a new set of possibilities for improving data quality, such as automating data entry or making quality assessments far easier than was previously possible. Let us look at how AI could be applied to improve data quality across the entire value chain.

2. The Power of the MDM "Golden Record"

In the second article of the trilogy, we dealt with the root causes of poor data quality in two distinct stages of the data value chain: data creation in operational systems and data integration in analytical systems.

We also introduced master data management as an important piece of the puzzle, one that we now need to deal with explicitly. Thus, we will talk about three systems:

1. Operational Systems (SOE): The System of Entry (e.g., ERP, CRM). These systems prioritize transaction speed and local workflow efficiency.

2. Master Data Management (MDM): The System of Record (SOR) and governance hub. This platform creates the Golden Record: the single, best version of core entities (Customer, Product, Supplier).

3. Data & Analytics (D&A): The System of Insight (Data Warehouse, Data Lake). These systems prioritize data integration, completeness, and fitness for analytical purposes.

Crucially, in this article we assume that MDM acts strictly as the System of Record (SOR). It dictates the enterprise standard and cleans data after creation, but the SOE remains the point of entry and local enforcement.

3. Practical Application: MDM's Golden Record and Key Entities

The core function of MDM is to create an enterprise-grade entity, often through enrichment. MDM consolidates data from different operational systems and adds attributes essential for risk, compliance, and strategic sales. See some examples from commercial, procurement and production domains below:

| Key Entity Example | Primary Operational System (SOE) | MDM's Core Contribution (The Golden Record) | Unique Enrichment Attributes Added by MDM |
|---|---|---|---|
| Customer | CRM | A unified 360-Degree View, merging records across sales, service, and billing systems. | External IDs (D&B ID, LEI), Compliance Status (AML/Sanction lists), Canonical Address. |
| Supplier | Procurement | A single, trusted record for contracts, payments, and risk assessment across multiple systems. | Risk Data (Credit ratings, adverse media flags), Tax ID validation status. |
| Product | PLM/ERP | A consolidated list of products/SKUs, linking engineering data to marketing content. | Regulatory Compliance (CE/RoHS status, often extracted from documents via NLP). |

Taking the commercial domain as an example, MDM ensures that there is just one correct and complete customer record that all operational systems handling order booking, goods shipping, billing, and after-sales service can rely on. Without a central controlling system such as MDM, each system may well capture customer details in slightly different ways, creating confusion about whether the same entity is being dealt with across all activities.
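How a Golden Record might be assembled from several source systems can be sketched in a few lines of Python. The records, field names, and the survivorship rule used here (the most recently updated non-empty value wins) are illustrative assumptions, not the behavior of any particular MDM product.

```python
from datetime import date

# Hypothetical customer records for the same entity in three source systems.
records = [
    {"source": "CRM", "updated": date(2024, 3, 1),
     "name": "Acme Corp.", "address": "1 Main St", "tax_id": None},
    {"source": "Billing", "updated": date(2024, 5, 10),
     "name": "ACME Corporation", "address": None, "tax_id": "DE123456789"},
    {"source": "Service", "updated": date(2023, 11, 20),
     "name": "Acme", "address": "1 Main Street, Springfield", "tax_id": None},
]


def build_golden_record(records, fields):
    """Assumed survivorship rule: per field, keep the newest non-empty value."""
    newest_first = sorted(records, key=lambda r: r["updated"], reverse=True)
    golden = {}
    for field in fields:
        # Walk from newest to oldest and take the first value that is filled in.
        golden[field] = next((r[field] for r in newest_first if r[field]), None)
    return golden


golden = build_golden_record(records, ["name", "address", "tax_id"])
print(golden)
```

Real survivorship rules are usually richer (per-source trust levels, per-attribute rules), but the principle is the same: no single source record is taken wholesale.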

4. Which system owns which data quality task?

The operational, MDM, and analytical systems each have a separate data quality role, as outlined in the table below. Broadly speaking, local data integrity is the job of the operational system, and global integrity that of the MDM system. The analytical system may play a supporting role in improving data quality in these two systems and, of course, improves data quality downstream for its own analytical needs.

| Data Value Chain Phase | Operational Systems (SOE/CRM/ERP) Role | MDM System (System of Record - SOR) Role | D&A Systems (Warehouse/Lake) Role |
|---|---|---|---|
| 1. Creation/Entry | Local Enforcement: Captures raw data. Enforces mandatory fields, format checks, and application-level business rules. Correction During Approval: Business users manually correct data flaws during internal approval workflows. | Standard Definition: Defines the canonical model, data standards, and controlled Reference Data lists used by all systems. | None. |
| 2. Acquisition/Ingestion | Data Export: Selects and pushes relevant master data records to the MDM hub (or directly to D&A if no MDM). Local Integrity Checks: Performs referential integrity checks before passing data. | Gateway Check: Performs initial completeness and conformity profiling against enterprise standards in the staging layer. Flags records for mastering. | Observability: Performs pipeline-level DQ checks (volume, freshness, schema drift). Ingests raw data for further enterprise profiling. |
| 3. Mastering/Stewardship | Update Consumption: Receives the clean Golden Record from MDM and updates its local record. Obsolescence Mgmt: Automatically manages local status changes and archival/deletion triggers. | Core DQ Function: Deduplication, Survivorship, Standardization, and Enrichment. Creates and governs the single, trusted Golden Record. | Enterprise Profiling & Stewardship Support: Performs cross-system profiling on large datasets. Provides the central UI for stewards to visualize data quality and MDM exceptions. |
| 4. Analysis/Consumption | Operational Reporting: Generates transaction-focused, local reports and tracks basic, local DQ metrics. | Distribution: Publishes the clean Golden Record (as a dimension) to D&A for analytical use. | Modeling & Quality Improvement: Performs last-mile wrangling (cleaning, imputation, feature engineering) specific to ML models. Impact Measurement: Correlates DQ issues with business outcomes and performs final BI reporting. |

5. Deep Dive: AI's Role in the Data Value Chain

Data quality improvement has traditionally relied on rule checks and enforcement, with only limited AI enhancement. In the future, though, one can expect more AI enhancements directed at three benefit areas:

- Convenience (GenAI): Shifting from manual "data entry" to "data curation."

- Sophistication (Predictive AI): Finding "invisible" errors or duplicates across millions of rows.

- Automation (Self-Healing): Moving from reactive cleaning to proactive prevention.

AI data quality opportunities in Operational Systems (SOE)

Focus: Efficiency and Prevention

| Phase | Mix of Rules & Basic AI | Advanced AI (Sophisticated/Autonomous) | DQ Dimension |
|---|---|---|---|
| 1. Entry | Smart Pick-lists: Rules suggest values based on the previous field. | GenAI Auto-Fill: Extracts data from an email or invoice to populate the form. | Completeness / Accuracy |
| 2. Ingestion | Format Enforcement: Rules ensure dates and IDs follow a fixed pattern. | Semantic Parsing: AI spots "logic" errors (e.g., a "kg" value for a liquid product). | Consistency / Validity |
| 3. Stewardship | Local Duplicate Check: Flags if an identical name exists in the local DB. | Contextual Matcher: Identifies "hidden" duplicates with different names/IDs. | Uniqueness |
| 4. Analysis | Field Health Score: Reports how many mandatory fields are empty. | Pattern Drift Alerts: AI flags when users start bypassing a standard process. | Integrity |
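To make the Ingestion row concrete, here is a minimal Python sketch that contrasts a format rule with a "logic" check. The SKU pattern, the category-to-unit table, and the field names are hypothetical. Note that the unit check is a hand-written lookup standing in for what a trained model would infer from data; it is not semantic parsing itself.

```python
import re

# Hypothetical ID format: three capital letters, a dash, four digits.
SKU_PATTERN = re.compile(r"[A-Z]{3}-\d{4}")

# Assumed lookup of plausible units per product category. In an AI-based
# check this knowledge would be learned from data rather than hard-coded.
PLAUSIBLE_UNITS = {"liquid": {"l", "ml"}, "solid": {"kg", "g"}}


def check_record(record):
    """Return a list of data quality issues found in one product record."""
    issues = []
    # Format enforcement: the ID must follow the fixed pattern exactly.
    if not SKU_PATTERN.fullmatch(record["sku"]):
        issues.append("sku: does not match expected format")
    # Logic check: the unit must make sense for the product category.
    plausible = PLAUSIBLE_UNITS.get(record["category"], set())
    if record["unit"] not in plausible:
        issues.append("unit: implausible for category")
    return issues


# A well-formed ID that still carries a logic error (kg for a liquid).
print(check_record({"sku": "ABC-1234", "category": "liquid", "unit": "kg"}))
```

The record passes the format rule but fails the logic check, which is exactly the class of error that pattern-based rules alone tend to miss.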

AI data quality opportunities in MDM Systems (SOR)

Focus: Automation and Governance

| Phase | Mix of Rules & Basic AI | Advanced AI (Sophisticated/Autonomous) | DQ Dimension |
|---|---|---|---|
| 1. Entry | Auto-Classification: Basic NLP maps an item to a product family. | Cross-Domain Discovery: AI links a "Customer" record to a "Supplier" legal entity. | Accuracy / Consistency |
| 2. Ingestion | Schema Mapping: Suggests which source fields go into the "Golden" template. | Adaptive Trust: AI lowers the trust score of a source system if its error rate rises. | Reliability |
| 3. Stewardship | Probabilistic Match: Scores records based on name/address similarity. | Autonomous Resolution: AI merges high-confidence matches with zero human touch. | Uniqueness / Validity |
| 4. Analysis | Error Heatmaps: Shows which regions/systems produce the most "junk." | Root Cause Predictor: Flags which source-system rule change will fix the most errors. | Timeliness / Integrity |
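The Stewardship row can be sketched as a similarity score plus thresholds that decide whether a pair is merged automatically or routed to a steward. This is a simplified illustration using Python's standard-library `difflib`; the weights and thresholds are assumptions chosen for the example, and production matching engines typically use more sophisticated models.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Character-level similarity between two strings, from 0.0 to 1.0."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def match_score(rec_a, rec_b, name_weight=0.6, address_weight=0.4):
    """Weighted name/address similarity (weights are illustrative)."""
    return (name_weight * similarity(rec_a["name"], rec_b["name"])
            + address_weight * similarity(rec_a["address"], rec_b["address"]))


def decide(score, auto_merge=0.9, review=0.7):
    """Route a candidate pair based on assumed confidence thresholds."""
    if score >= auto_merge:
        return "auto-merge"       # high confidence: no human touch
    if score >= review:
        return "steward review"   # uncertain: a person decides
    return "no match"


a = {"name": "Acme Corp.", "address": "1 Main St"}
b = {"name": "ACME Corp", "address": "1 Main Street"}
score = match_score(a, b)
print(round(score, 2), decide(score))
```

Where the thresholds sit is a governance decision: lowering the auto-merge threshold reduces steward workload but raises the risk of wrongly merged entities.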

AI data quality opportunities in Data & Analytics (D&A)

Focus: Impact and Observability

| Phase | Mix of Rules & Basic AI | Advanced AI (Sophisticated/Autonomous) | DQ Dimension |
|---|---|---|---|
| 2. Ingestion | Static Volume Check: Flags if a data load is 20% lower than yesterday. | Adaptive Observability: ML learns seasonal trends to stop "false-alarm" alerts. | Timeliness / Validity |
| 3. Stewardship | Rule-Based Imputation: Fills a missing state if the city is "New York." | Contextual Imputation: ML predicts missing values based on complex peer data. | Completeness |
| 4. Analysis | DQ Dashboard: A simple report showing overall data health metrics. | GenAI Fact-Checker: Alerts the user if their BI report is skewed by bad data. | Reliability |
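The difference between the static and the adaptive volume check in the Ingestion row can be illustrated with a small Python sketch. The adaptive variant below is deliberately simple: a z-score against the history of the same weekday stands in for a learned seasonal model. The row counts and the threshold are made-up values.

```python
from statistics import mean, stdev


def static_volume_alert(today, yesterday, max_drop=0.20):
    """Static rule: alert if today's load is more than 20% below yesterday's."""
    return today < yesterday * (1 - max_drop)


def seasonal_volume_alert(today, same_weekday_history, z_threshold=3.0):
    """Alert only if today deviates strongly from the same weekday's history.

    Needs at least two historical values to estimate the spread.
    """
    mu = mean(same_weekday_history)
    sigma = stdev(same_weekday_history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold


# Hypothetical row counts: a normal Saturday load following a busy Friday.
friday_rows = 10_000
saturday_rows = 4_000
past_saturdays = [4_100, 3_900, 4_050, 3_950]

print(static_volume_alert(saturday_rows, friday_rows))       # True
print(seasonal_volume_alert(saturday_rows, past_saturdays))  # False
```

The static rule fires every weekend, which is the kind of false alarm that trains teams to ignore alerts; the seasonal check stays quiet because a Saturday load of this size is normal.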

6. Why is fixing data in the source system always preferable?

The 1:10:100 rule is often quoted in the context of data quality. According to the rule, it costs $1 to prevent an error at the source, $10 to correct it further downstream, and $100 or more to fix it after it has been consumed. The rule originated in product design and defect management and, in my view, does not translate well to data quality. While the 10x multiplier may not be grounded in reality, the general direction is indisputable: the cost of fixing a data quality issue increases as the data flows through the system.

Data practitioners recognize this. Whether or not one uses AI to improve data quality, cleaning up at the source remains the most effective approach.
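The arithmetic behind the 1:10:100 rule can be written out as a small Python sketch. The per-error costs are the rule's nominal values, which, as noted, should not be read as measured figures; the error counts are invented for illustration. Pass your own multipliers to `total_cost` if you have better estimates.

```python
# Nominal per-error costs from the 1:10:100 rule (illustrative, not measured).
COST_PER_ERROR = {"source": 1, "downstream": 10, "consumption": 100}


def total_cost(errors_by_stage, cost_per_error=COST_PER_ERROR):
    """Total cost given how many errors are fixed at each stage."""
    return sum(cost_per_error[stage] * count
               for stage, count in errors_by_stage.items())


# 1,000 errors, all prevented at the source.
all_at_source = total_cost({"source": 1000})

# The same 1,000 errors, with 30% slipping past the source.
mixed = total_cost({"source": 700, "downstream": 200, "consumption": 100})

print(all_at_source, mixed)  # 1000 12700
```

Even with far gentler multipliers than 10x, the shape of the result holds: the small share of errors that escapes the source dominates the total cost.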

7. How does AI make it cost-efficient to fix data quality at the source?

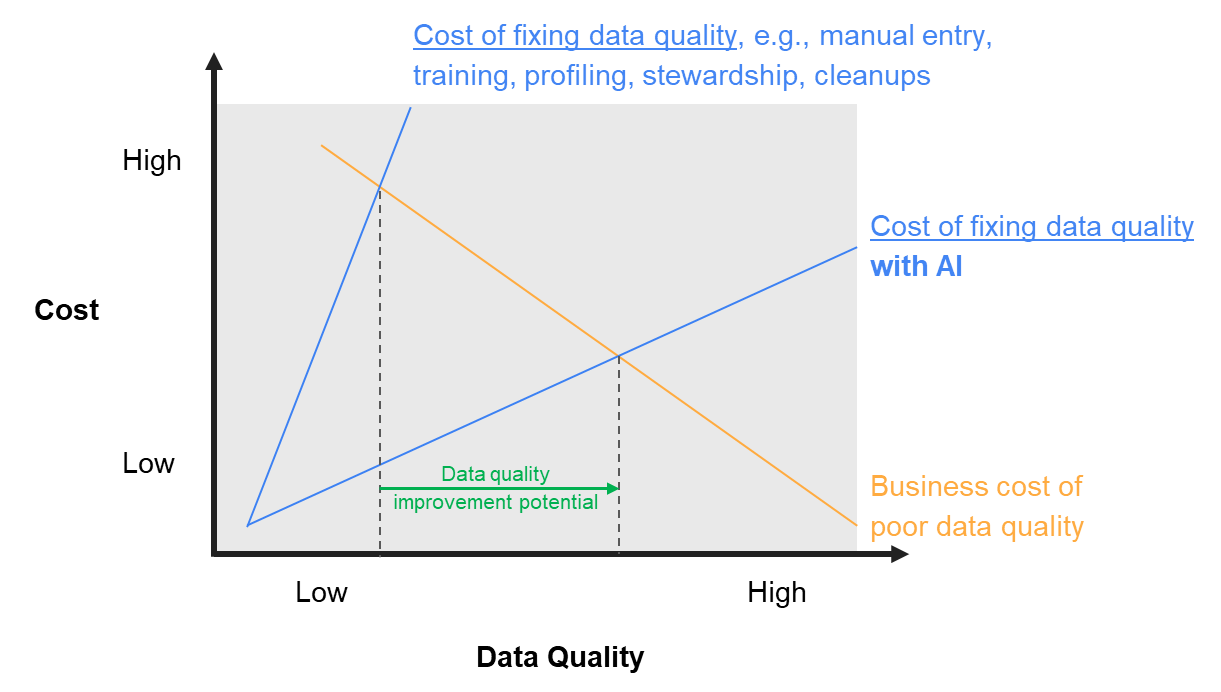

Historically, fixing data at the source was "desirable but impossible." Operational systems have many business users whose priorities and incentives are often misaligned with data quality needs. Furthermore, it is incredibly hard to maintain standards for processes, approaches, and definitions across a large community of business users, especially since the user base keeps changing as employees move in and out of units and roles.

Thus, although the cost of poor quality is high, the cost of improving quality is also high! This is where the 1:10:100 rule breaks down.

This is where AI really shines: it reduces the cost of fixing data quality. Specifically, GenAI helps us break through this conundrum by automating data entry:

- Consistency and Accuracy: AI uses one set of rules, language, and terminology for all entries.

- Efficiency and Timeliness: Data that took hours to enter into databases now takes seconds.

- Completeness: Removes the time constraints that prevent users from entering all valuable data.

We created Augmend specifically to capitalize on this shift. By deploying cutting-edge AI directly at the point of entry, Augmend supports users with real-time harmonization, enrichment, and validation. If the high cost and complexity of "fixing the source" have deterred your organization in the past, it is time to revisit the strategy. The cost equation has fundamentally changed.