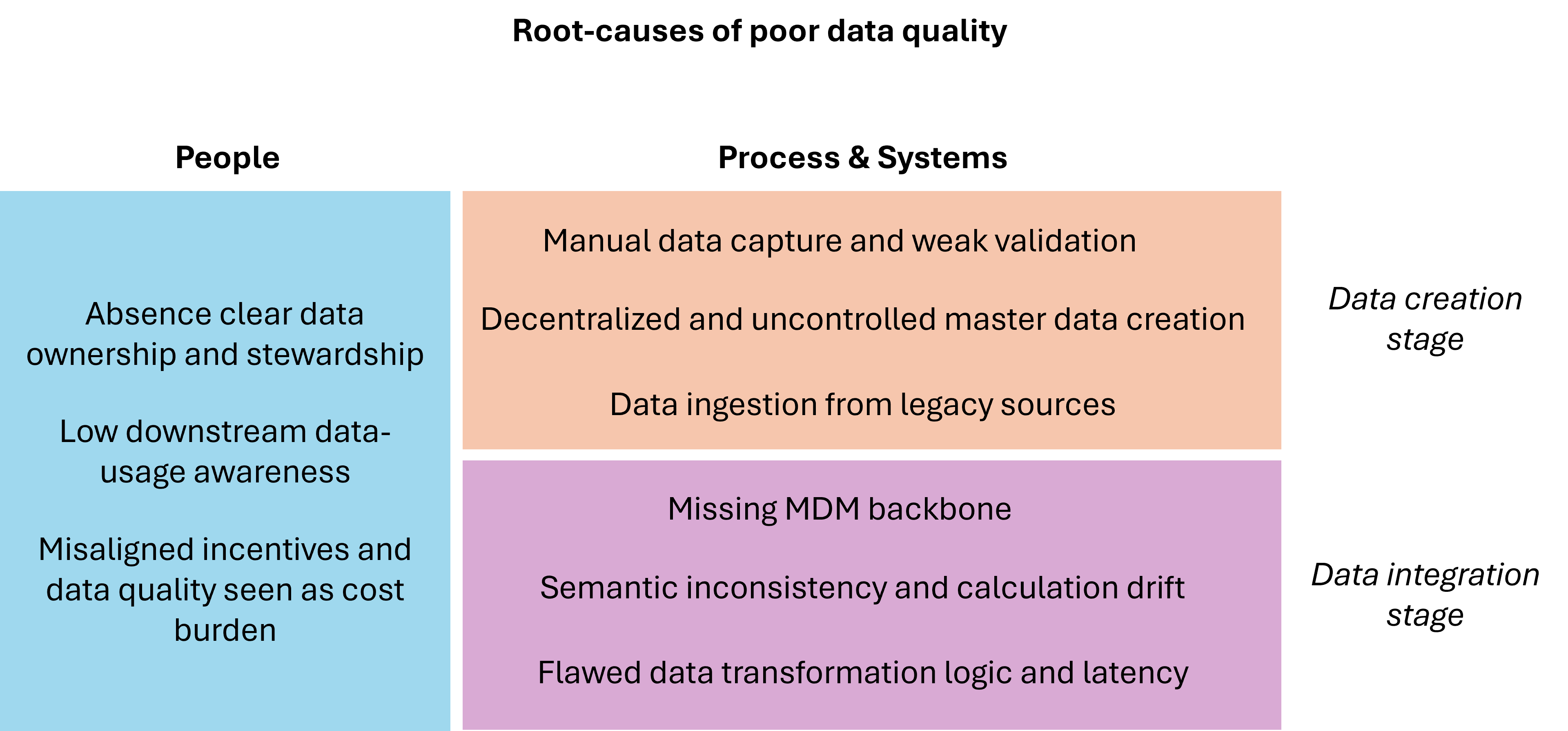

Poor data quality (DQ) is not a single problem but a complex failure rooted in people, process, and technology across the entire data lifecycle. Understanding where and why these failures occur is the essential first step in fixing data quality.

I. What are the people root causes of poor data quality?

These are failures in culture, accountability, and standards. They occur in both the creation and integration stages and create an environment where bad data is tolerated.

A. Absence of clear data ownership and stewardship

- Lack of executive sponsorship: DQ initiatives fail because they lack high-level authority to enforce cross-departmental standards and budgets.

- Undefined data domains: Accountability is vague because critical assets such as Suppliers or Materials have no official Data Owner assigned to them.

- Poor enforcement: Rules exist on paper, but there is no formal audit or review process to ensure compliance.

B. Low data-usage awareness

- Lack of training: Frontline users are never trained on the definition or downstream impact of the data they enter (e.g., they don't know that a missing material grade can stall a week of production planning).

- Data silos: Users only care about the data within their own system and have no visibility into how their data entry errors are amplified in global reports.

C. Misaligned incentives and cost-focus

- Misaligned incentives: Employees are measured on transaction throughput or output quantity, incentivizing speed over accuracy.

- Cost focus: Data quality is viewed purely as a cost burden rather than an investment in risk reduction and revenue enablement.

II. What are the process & systems root causes of poor data quality at the data creation stage?

These failures are the direct technical and process gaps at the point of origin, where an error is cheapest to prevent.

A. Reliance on manual data capture and weak validation

- Manual data entry: Processes depend on human transcription or data entry even when automation is feasible.

- Missing input controls: Source systems do not sufficiently gate data at entry, so missing values, invalid codes, and out-of-range numbers pass through unchecked.
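As a rough illustration of an input control, the sketch below gates a material record before it is saved. The field names (`material_id`, `material_grade`, `unit_price`) and the function `validate_material_record` are hypothetical, and the A-F grade list is borrowed from the Material Grade example used later in this article; a real source system would enforce equivalent rules in its own configuration.

```python
# Assumption: approved grades A-F, taken from the article's Material Grade example.
VALID_MATERIAL_GRADES = {"A", "B", "C", "D", "E", "F"}
# Assumption: hypothetical field names for a material master record.
REQUIRED_FIELDS = ("material_id", "material_grade", "unit_price")


def is_blank(value) -> bool:
    """Treat None and whitespace-only strings as missing."""
    return value is None or str(value).strip() == ""


def validate_material_record(record: dict) -> list[str]:
    """Return validation errors; an empty list means the record may be saved."""
    errors = []

    # Completeness: every required field must carry a value.
    for field in REQUIRED_FIELDS:
        if is_blank(record.get(field)):
            errors.append(f"{field} is required")

    # Validity: the grade must come from the approved code list.
    grade = record.get("material_grade")
    if not is_blank(grade) and str(grade).strip().upper() not in VALID_MATERIAL_GRADES:
        errors.append(f"material_grade '{grade}' is not an approved code")

    # Range check: the price must be a positive number.
    price = record.get("unit_price")
    if not is_blank(price):
        try:
            if float(price) <= 0:
                errors.append("unit_price must be greater than zero")
        except (TypeError, ValueError):
            errors.append("unit_price must be numeric")

    return errors
```

Returning a list of errors rather than raising on the first one lets the entry screen show the user everything that needs fixing in one pass.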

B. Decentralized and uncontrolled master data creation

- Multiple entry points: Different departments can independently create the same core master data record, e.g., procurement, manufacturing, and finance can each create a new supplier ID.

- Lack of master data search: Users are allowed to create a new record without first being forced to search for an existing, similar record, leading to immediate uniqueness failure.

- Lack of stewardship workflow: The process for creating a new critical master data record is not governed by a workflow that requires review and sign-off from all impacted domains.
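A forced search before creation can be sketched as follows. This is a minimal illustration, not a production matching engine: it uses Python's standard `difflib.SequenceMatcher` for string similarity, and the suffix list, the 0.85 threshold, and the function names are all assumptions chosen for the example.

```python
import re
from difflib import SequenceMatcher

# Assumption: a small, illustrative list of legal suffixes to ignore when comparing names.
LEGAL_SUFFIXES = {"corp", "corporation", "inc", "incorporated", "ltd", "limited", "llc", "gmbh"}


def normalize_name(name: str) -> str:
    """Lowercase, strip punctuation, and drop legal suffixes."""
    tokens = re.sub(r"[^a-z0-9 ]", " ", name.lower()).split()
    return " ".join(t for t in tokens if t not in LEGAL_SUFFIXES)


def find_similar_suppliers(new_name: str, existing: dict, threshold: float = 0.85) -> list:
    """Return (supplier_id, name, score) for existing suppliers that resemble new_name.

    `existing` maps supplier IDs to supplier names. The threshold is an assumption;
    governance would normally define what similarity counts as a match.
    """
    target = normalize_name(new_name)
    matches = []
    for supplier_id, name in existing.items():
        score = SequenceMatcher(None, target, normalize_name(name)).ratio()
        if score >= threshold:
            matches.append((supplier_id, name, round(score, 2)))
    # Best match first, so a reviewer sees the most likely duplicate at the top.
    return sorted(matches, key=lambda m: m[2], reverse=True)


def create_supplier(new_name: str, existing: dict, new_id: str) -> str:
    """Create the supplier only if the mandatory search finds no likely duplicate."""
    candidates = find_similar_suppliers(new_name, existing)
    if candidates:
        raise ValueError(f"Possible duplicates found, route to a data steward: {candidates}")
    existing[new_id] = new_name
    return new_id
```

With `{"S-001": "ACME Corporation"}` already on file, a request to create "ACME Corp, Inc." is blocked and routed for review instead of producing a second ID.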

C. Data ingestion from unstructured/legacy sources

- Legacy system migration flaws: Flawed data is introduced during system upgrades (e.g., an ERP implementation) when bad data from the old system is simply copied over, perpetuating the waste multiplier.

- Lack of standardized interfaces: External partners such as suppliers are required to submit data via unstructured emails or text documents rather than controlled APIs or EDI interfaces.

III. What are the process & systems root causes of poor data quality at the data integration stage?

These failures occur downstream in the pipeline, where the focus shifts from fixing individual records to managing integration, transformation, and semantic consistency.

A. Missing master data management (MDM) backbone

- Lack of central key resolution: The analytical layer cannot rely on a single, clean key for a critical entity such as supplier or material, forcing data scientists to spend time writing complex, brittle code to reconcile IDs.

- Inconsistent source mapping: The ETL/ELT process attempts to combine 10 sources without an authoritative MDM reference, ensuring the Integration Killer effect.
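The idea of central key resolution can be sketched with a crosswalk that maps each source system's local ID to one master key. Everything here is hypothetical: the source names, the IDs, and the `CROSSWALK` table stand in for whatever an MDM platform would actually maintain.

```python
# Hypothetical crosswalk maintained by MDM: (source_system, local_id) -> master supplier key.
CROSSWALK = {
    ("erp", "V1001"): "SUP-0001",
    ("procurement", "ACME-7"): "SUP-0001",
    ("finance", "900045"): "SUP-0002",
}


def resolve_supplier_keys(rows: list, crosswalk: dict) -> tuple:
    """Attach the master key to each row; rows without a mapping are set aside."""
    resolved, unresolved = [], []
    for row in rows:
        master_id = crosswalk.get((row["source"], row["supplier_id"]))
        if master_id is None:
            # Do not guess: unmapped IDs go back to stewardship instead of into reports.
            unresolved.append(row)
        else:
            resolved.append({**row, "master_supplier_id": master_id})
    return resolved, unresolved


def spend_by_supplier(resolved_rows: list) -> dict:
    """Aggregate spend on the master key, so one vendor shows up as one line."""
    totals = {}
    for row in resolved_rows:
        key = row["master_supplier_id"]
        totals[key] = totals.get(key, 0) + row["amount"]
    return totals
```

Because every pipeline looks up the same crosswalk, the reconciliation logic lives in one governed place rather than in each analyst's notebook.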

B. Semantic inconsistency and calculation drift

- Decentralized report logic: Different BI tools or departments implement conflicting logic for the same KPI (e.g., one department calculates "Fill Rate" differently than another).

- No central metric catalogue: The business lacks a formal, governed repository for metric definitions, leading to endless arguments about whose report is "correct."
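One lightweight way to picture a metric catalogue is a single registry that every report calls instead of re-implementing the KPI. The sketch below is an assumption-laden example: the fill rate formula (units shipped divided by units ordered, with over-shipments capped) is only one possible definition, and the class, owner, and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class MetricDefinition:
    name: str
    owner: str          # accountable business owner (hypothetical value below)
    description: str    # the agreed business definition in plain language
    compute: Callable[[list], float]


def _fill_rate(order_lines: list) -> float:
    """Assumed definition: shipped units / ordered units, capping over-shipments per line."""
    ordered = sum(line["qty_ordered"] for line in order_lines)
    shipped = sum(min(line["qty_shipped"], line["qty_ordered"]) for line in order_lines)
    return shipped / ordered if ordered else 0.0


# The single governed place where KPI logic lives.
METRIC_CATALOGUE = {
    "fill_rate": MetricDefinition(
        name="fill_rate",
        owner="Supply Chain",
        description="Units shipped divided by units ordered, over-shipments capped per line.",
        compute=_fill_rate,
    ),
}


def compute_metric(name: str, rows: list) -> float:
    """Reports call this instead of carrying their own copy of the formula."""
    if name not in METRIC_CATALOGUE:
        raise KeyError(f"Metric '{name}' is not defined in the catalogue")
    return METRIC_CATALOGUE[name].compute(rows)
```

If two departments disagree about the formula, the disagreement is settled once in the catalogue entry, and every report picks up the change.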

C. Flawed ETL/ELT transformation logic and data latency

- Missing integrity checks: The transformation pipeline lacks crucial checks for Referential Integrity (e.g., ensuring a transaction links back to a valid Master Data ID) before the data is promoted for consumption.

- Insufficient audit logging: The pipeline fails to adequately log rejected records or transformation errors, making it impossible to trace the root cause of Conclusion Corruption back to the faulty source record.

- Stale data/batch processing: The analytical system relies on infrequent batch updates for data needed for near real-time decisions, causing Timeliness failures.
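The three controls above can be sketched together in a few lines. This is an illustrative outline only: the record fields (`txn_id`, `supplier_id`), the logger name, and the freshness threshold are assumptions, and a real pipeline would typically write rejects to a dedicated table as well as the log.

```python
import logging
from datetime import datetime, timedelta, timezone

logger = logging.getLogger("dq_pipeline")  # hypothetical logger name


def check_referential_integrity(transactions: list, valid_supplier_ids: set) -> tuple:
    """Split transactions into accepted rows and rejects with a recorded reason."""
    accepted, rejected = [], []
    for txn in transactions:
        if txn.get("supplier_id") in valid_supplier_ids:
            accepted.append(txn)
        else:
            # Keep the full source record so the error can be traced back later.
            reject = {"record": txn, "reason": f"unknown supplier_id {txn.get('supplier_id')!r}"}
            rejected.append(reject)
            logger.warning("Rejected transaction %s: %s", txn.get("txn_id"), reject["reason"])
    return accepted, rejected


def is_stale(last_loaded_at: datetime, max_age: timedelta, now: datetime = None) -> bool:
    """Return True when the last successful load is older than the agreed freshness window."""
    now = now or datetime.now(timezone.utc)
    return (now - last_loaded_at) > max_age
```

Only the `accepted` rows would be promoted for consumption, while `is_stale` can drive an alert when data needed for near real-time decisions has not been refreshed within its agreed window.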

IV. What are the root causes of poor accuracy, completeness, consistency, timeliness, validity, and uniqueness?

Poor-quality data can have one or many root causes. This holds even within a single DQ dimension, as the examples in the table below illustrate:

| DQ Dimension | Scenario / Failure Point | Root Cause Category 1: Organizational (Policy/People) | Root Cause Category 2: Operational (Input/Process) | Root Cause Category 3: Analytical (System/Architecture) |
|---|---|---|---|---|
| 1. Accuracy | Inaccurate TCO Metric (The reported total cost is wrong) | Policy Failure: Governance failed to mandate the exchange rate source for TCO calculation, leading analysts to use different rates. | Input Failure: The procurement clerk manually entered an incorrect Unit Price into the Purchase Order, corrupting the source transaction. | System Failure: The ETL logic doubles the cost of the freight component during transformation, corrupting the aggregated TCO metric. |
| 2. Completeness | Missing Customer Contact Info (Cannot follow up on order) | Policy Failure: Governance fails to declare "Secondary Phone Number" as a mandatory field in the customer creation policy. | Process Failure: The user interface for the CRM system has confusing navigation, leading the sales rep to overlook and skip the required "Zip Code" field. | Minimal/N/A: The primary failure is in the collection process; analytical systems can only flag the missing value, not fix it. |
| 3. Consistency | Inconsistent Supplier ID (Reports can't link spend to one vendor) | People Failure: The Data Steward was never trained on the rules for merging vendors and approved the creation of a new ID when one already existed. | Uncontrolled Creation: A buyer bypasses the search and creates a duplicate record for "ACME Corp" because the source system allows duplicate names. | Architecture Failure: The MDM system failed to identify and flag the high probability match between "ACME Corporation" and "ACME Corp, Inc." for merging. |
| 4. Timeliness | Late Inventory Update (Missed opportunity to sell stock) | Policy Failure: The Data Owner fails to define a Service Level Agreement (SLA) mandating that physical stock updates must be reflected within 5 minutes. | Manual Capture: A warehouse worker must manually enter stock counts into the system at the end of the shift, delaying the inventory update by 8 hours. | System Failure: The Analytical system is configured for a 24-hour batch refresh, delaying the consumption of already-entered inventory data. |
| 5. Validity | Invalid Material Grade (Production system rejects input) | Policy Failure: The Engineering team failed to formalize the new list of valid Material Grade codes (A-F), so the old codes are still in the documentation. | Input Failure: The source system is not configured with a range check, allowing the user to enter "G" (an invalid code) into the Material Grade field. | Minimal/N/A: Validity is an immediate input block. The system either rejects the input immediately or, if it passes, the error is difficult to fix downstream. |
| 6. Uniqueness | Duplicate Product Record (Inventory counted twice) | Policy Failure: The Governance body fails to define the business rules for how to match and merge two identical product records (e.g., what percentage of attribute similarity constitutes a match). | Process Failure: A user creates a second Product ID for an existing item because the system's search functionality is poor or confusing. | Architecture Failure: The MDM system fails to enforce the single, universal product key, allowing two unique internal IDs to represent the same physical product in the analytical layer. |

V. How can data quality issues be fixed efficiently?

Fixing data quality for all data at once is not feasible, so organizations usually prioritize the data fields that matter most. Effort along the three lines below typically takes an organization a long way toward better data quality:

Three High-Leverage Solutions

Start with the Source

Immediately implement Operational Automation to fix Validity and Timeliness, ensuring the system receives clean data quickly.

Establish the Rules

Implement Governance to define Accuracy and Completeness, giving technical teams the required standards.

Unify the Architecture

Implement MDM and Transformation Controls to fix Consistency and Uniqueness, enabling the system to deliver One Source of Truth for strategic decision-making.

In the next article, we will focus on how you can use AI to improve data quality. AI-powered solutions can automate data extraction from unstructured sources, validate data at entry points, and intelligently match and merge duplicate records, addressing root causes across all three categories.