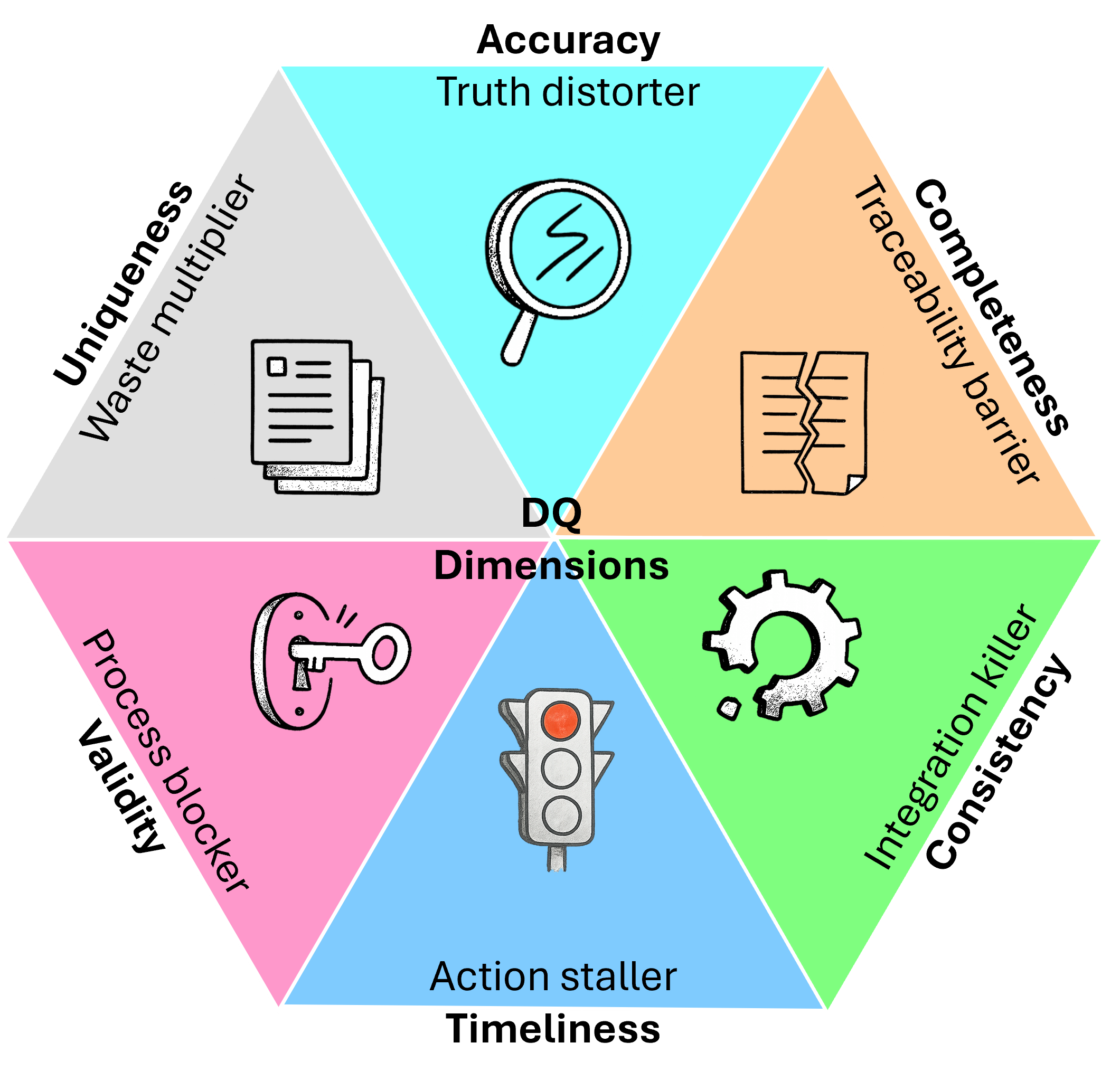

Data Quality (DQ) is the measure of data's suitability for its intended purpose, or its fitness for use. Although data quality can be measured along many dimensions, Accuracy, Completeness, Consistency, Timeliness, Validity, and Uniqueness are typically considered the most important. Focused improvements on these dimensions are critical to improving the speed and reliability of operational and strategic business decisions.

What Are the Foundational Dimensions of Data Quality?

At its core, data quality (DQ) is about fitness for purpose: the degree to which data supports the task or decision it's used for. Academic and industry bodies, including the Data Management Association (DAMA, publisher of the DAMA-DMBOK), have defined several dozen dimensions for assessing data quality. These dimensions range from Granularity (level of detail) to Recoverability (ability to restore data) and Reputation (trust in data) (DAMA-NL Research Paper). But improving quality across such a broad set of dimensions is neither practical nor necessary.

The Consensus: Distilling to the core six Data Quality dimensions

While the universe of DQ dimensions is large, the literature converges on six foundational dimensions that provide robust and widely accepted data quality coverage in practice. The table below summarizes each dimension and the business rationale for its importance.

| Dimension | Relevant data quality question | Dimension impact |
|---|---|---|
| 1. Accuracy | Does the data correctly represent the real-world object? | Truth distorter, e.g., faulty sensor reading in a production plant |
| 2. Completeness | Are all necessary fields populated? | Traceability barrier, e.g., incomplete data makes root cause analysis impossible |
| 3. Consistency | Does the data match across records and different systems? | Integration killer, e.g., creates more complexity in data analysis |
| 4. Timeliness | Is the data available when needed? | Action staller, e.g., cannot make process changes in sync with external changes |
| 5. Validity | Does the data conform to the required formats, standards, and constraints? | Process blocker, e.g., often leads to investigation and revisiting the data to correct it |
| 6. Uniqueness | Does each record represent a distinct entity? | Waste multiplier, e.g., creates inefficiencies in working with data |
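To make the six questions in the table concrete, here is a minimal Python sketch that turns each one into a pass rate over a handful of records. Everything in it is an assumption made for illustration: the field names (`temp_c`, `reference_c`, `loaded_at`, and so on), the one-degree tolerance, the one-hour lag limit, the 100 to 400 range, and the `ERP_PART_IDS` lookup are all hypothetical, and real checks would be defined by the owners of the data.

```python
from datetime import datetime, timedelta

REQUIRED_FIELDS = ("id", "part_id", "temp_c", "recorded_at")
ERP_PART_IDS = {"VLV-100A"}  # hypothetical master data from another system


def share(records, passes):
    """Fraction of records for which the check `passes` returns True."""
    return sum(1 for r in records if passes(r)) / len(records)


def is_accurate(r, tolerance=1.0):
    # Accuracy: the value agrees with a trusted reference measurement.
    return r["temp_c"] is not None and abs(r["temp_c"] - r["reference_c"]) <= tolerance


def is_complete(r):
    # Completeness: every required field is populated.
    return all(r.get(field) not in (None, "") for field in REQUIRED_FIELDS)


def is_consistent(r):
    # Consistency: the part ID matches the ID held in the other system.
    return r["part_id"] in ERP_PART_IDS


def is_timely(r, max_lag=timedelta(hours=1)):
    # Timeliness: the record was loaded soon enough after it was recorded.
    return r["loaded_at"] - r["recorded_at"] <= max_lag


def is_valid(r, low=100.0, high=400.0):
    # Validity: the value lies inside the allowed range.
    return r["temp_c"] is not None and low <= r["temp_c"] <= high


def uniqueness(records):
    # Uniqueness: share of distinct record IDs.
    return len({r["id"] for r in records}) / len(records)


def reading(id_, part_id, temp_c, reference_c, lag_minutes):
    """Build one illustrative record; all values are made up."""
    recorded = datetime(2024, 1, 1, 8, 0)
    return {
        "id": id_, "part_id": part_id, "temp_c": temp_c, "reference_c": reference_c,
        "recorded_at": recorded, "loaded_at": recorded + timedelta(minutes=lag_minutes),
    }


readings = [
    reading("R1", "VLV-100A", 250.0, 250.4, 5),
    reading("R2", "isolation valve, 10B", 255.0, 250.0, 720),  # wrong label, 12 h late
    reading("R3", "VLV-100A", 850.0, 300.0, 10),               # out of range
    reading("R3", "VLV-100A", None, 300.0, 10),                # duplicate ID, missing value
]

scores = {
    "accuracy": share(readings, is_accurate),
    "completeness": share(readings, is_complete),
    "consistency": share(readings, is_consistent),
    "timeliness": share(readings, is_timely),
    "validity": share(readings, is_valid),
    "uniqueness": uniqueness(readings),
}
print(scores)
```

Expressing each dimension as a share between 0 and 1 is only one possible design choice; it makes the dimensions comparable on a dashboard, but it hides which records failed, so a real implementation would likely also keep the failing rows.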

Data quality issues examples in Manufacturing, Procurement, and Innovation domains

The tables below give specific examples of data quality issues and show how they create significant business problems across the Manufacturing, Procurement, and Innovation domains. I have chosen to focus on Manufacturing and Procurement within Supply Chain, but the same reasoning applies equally to other areas such as Quality, Regulatory, Planning, Warehousing, and Logistics.

Table 1: Data Quality Failures Impacting Manufacturing Operations

In manufacturing, data integrity is critical for product quality, regulatory compliance, and maximizing output. Failures here directly translate to scrap, rework, and costly downtime.

| Dimension | Manufacturing data quality issue example | Business impact |
|---|---|---|
| Accuracy | A temperature sensor calibration record is off by 5°C, causing automated process control to run at an incorrect setting. | Leads to incorrect process control, resulting in product defects or quality deviations that increase scrap. |
| Completeness | The mandatory field for recording the batch pressure release time is frequently left blank in the MES. | Breaks the traceability chain, making it impossible to perform a root cause analysis during a product recall or audit. |
| Consistency | The part ID for a specific valve is labeled "VLV-100A" in ERP master data, but "isolation valve, 10B" in local MES. | Prevents Master Data Management and causes maintenance teams to order the wrong part, leading to unexpected downtime. |
| Timeliness | Data on machine utilization is batched and uploaded every 12 hours instead of streaming in real-time. | Prevents real-time alerting and automated process adjustments, leading to inefficient energy consumption or excessive cycle time. |
| Validity | An operator manually enters "850" into a system field requiring the temperature to be between 100°C and 400°C. | If unflagged, this extreme value can corrupt statistical models and trigger false alarms or incorrect automated interventions. |
| Uniqueness | The Master Data system contains two different part numbers for the exact same raw material, created during different legacy system migrations. | Leads to inflated inventory counts and over-ordering of materials, resulting in excessive capital being tied up in storage. |
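The Completeness and Validity rows above describe problems that can be caught at the point of entry. The sketch below shows one way such a gate might look. The function name `validate_batch_record`, the field names `pressure_release_time` and `temperature_c`, and the shape of the record are hypothetical; only the 100 to 400 range comes from the example in the table.

```python
def validate_batch_record(record, temp_range=(100.0, 400.0)):
    """Return a list of data quality issues found in one batch record."""
    issues = []

    # Completeness: the pressure release time must not be blank.
    if not (record.get("pressure_release_time") or "").strip():
        issues.append("completeness: pressure_release_time is blank")

    # Validity: the temperature must be numeric and inside the allowed range.
    raw = record.get("temperature_c", "")
    try:
        temp = float(raw)
    except (TypeError, ValueError):
        issues.append(f"validity: temperature_c {raw!r} is not a number")
    else:
        low, high = temp_range
        if not low <= temp <= high:
            issues.append(f"validity: temperature_c {temp} outside {low}-{high}")

    return issues


# An operator leaves the time blank and types "850" for the temperature.
for issue in validate_batch_record({"pressure_release_time": "", "temperature_c": "850"}):
    print(issue)
```

Returning a list of issues rather than raising on the first one is a deliberate choice here: the operator sees everything that needs fixing in a single pass.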

Table 2: Data Quality Failures Impacting Procurement

For procurement, data quality is the backbone of spend analysis, supplier risk mitigation, and achieving contractual savings. Failures directly result in financial loss.

| Dimension | Procurement data quality issue example | Business impact |
|---|---|---|
| Accuracy | A supplier's quoted unit price is off by 15% in the ERP due to a manual transcription error. | Causes an inaccurate Total Cost of Ownership (TCO) calculation, skewing strategic sourcing decisions. |
| Completeness | Supplier contracts are scanned and uploaded as PDFs, but the end date and renewal terms are never entered into the structured contract management system. | Results in missed renewal deadlines and the automatic rollover to higher rates (Maverick Spend). |
| Consistency | Two different regional ERP systems use different terminology for the same commodity (e.g., "MRO Fastener" vs. "Hardware Bolt"). | Makes global spend analysis impossible, preventing volume consolidation for critical bulk discounts. |
| Timeliness | The change in a supplier's compliance status (e.g., environmental certification expired) is not updated in the system until 90 days after expiration. | Exposes the company to regulatory risk by unknowingly ordering from a non-compliant or expired vendor. |
| Validity | A user enters a Purchase Order (PO) number that does not match the required nine-digit numerical format. | The PO fails downstream processing, requiring manual intervention and rework by the finance team to reconcile the transaction. |
| Uniqueness | A single vendor ("CV Corp") has three separate vendor IDs created in the system due to slight naming variations or lack of consolidation. | Destroys supplier leverage by fragmenting spend and risks duplicate payments. |
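Two of the procurement rows lend themselves to simple automated checks: the nine-digit PO format (Validity) and the duplicated vendor (Uniqueness). The following is a rough sketch, not a production matching routine. The vendor IDs, the name variants, and the `LEGAL_SUFFIXES` list are invented for illustration, and real vendor matching usually needs fuzzier comparison plus tax IDs or addresses.

```python
import re
from collections import defaultdict

PO_PATTERN = re.compile(r"[0-9]{9}")  # nine digits, as in the Validity example
LEGAL_SUFFIXES = {"corp", "corporation", "inc", "ltd", "llc"}  # illustrative only


def is_valid_po(po_number):
    """Validity: True when the PO number is exactly nine digits."""
    return PO_PATTERN.fullmatch(po_number) is not None


def normalize_vendor_name(name):
    """Lower-case the name, drop punctuation and common legal suffixes."""
    words = re.sub(r"[^a-z0-9 ]", "", name.lower()).split()
    return " ".join(w for w in words if w not in LEGAL_SUFFIXES)


def find_duplicate_vendors(vendors):
    """Uniqueness: group vendor IDs that share a normalized name."""
    groups = defaultdict(list)
    for vendor_id, name in vendors.items():
        groups[normalize_vendor_name(name)].append(vendor_id)
    return {name: ids for name, ids in groups.items() if len(ids) > 1}


# Hypothetical vendor master with three spellings of the same supplier.
vendors = {
    "V001": "CV Corp",
    "V002": "CV Corp.",
    "V003": "C.V. Corporation",
    "V004": "Acme Ltd",
}

print(is_valid_po("123456789"), is_valid_po("PO-12345"))
print(find_duplicate_vendors(vendors))
```

Normalizing names before grouping is the simplest possible approach; it will miss misspellings and may wrongly merge distinct vendors with similar names, so flagged groups should be reviewed by a person before any IDs are consolidated.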

Table 3: Data Quality Failures Impacting Innovation (R&D)

In innovation, quality data dictates the speed of discovery, the reliability of experimental results, and the ability to effectively leverage Intellectual Property (IP) for product velocity.

| Dimension | Innovation data quality issue example | Business impact |
|---|---|---|
| Accuracy | A laboratory equipment log incorrectly records the concentration of a proprietary catalyst used in a synthesis trial. | Renders the experimental results unreliable, leading to flawed conclusions and wasted subsequent trials based on bad data. |
| Completeness | An engineer's final design document is submitted without the accompanying Bill of Materials (BOM) or materials grade sheet attached. | Stalls the production handoff as procurement cannot order parts, delaying time-to-market and increasing costs. |
| Consistency | Testing methodology ("Tensile Test") is documented using different standard identifiers across two separate R&D labs. | Data from different labs cannot be automatically compared or aggregated, undermining portfolio analysis and reuse. |
| Timeliness | A new process optimization result is ready but is delayed by three weeks while waiting for manual sign-off and entry into the knowledge system. | The R&D team cannot quickly adjust running experiments, wasting valuable time and accelerating data decay. |
| Validity | A required measurement is entered as a non-standard abbreviation (e.g., "Hi Temp" instead of a numerical value) into the database. | Prevents data from being queried or used in comparison functions, making the data unusable for analysis. |
| Uniqueness | A new compound is given a temporary identifier, but a chemist later assigns the same identifier to a completely different compound. | Corrupts the knowledge base permanently, risking cross-contamination of experimental data and incorrect conclusions. |
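The Uniqueness row above, where one identifier ends up pointing at two different compounds, can be detected with a small scan over the registry. This is a sketch under assumptions: the `(identifier, compound)` pair layout, the `TMP-` identifiers, and the compound names are all hypothetical.

```python
from collections import defaultdict


def find_identifier_collisions(entries):
    """Return identifiers that have been assigned to more than one compound."""
    compounds_by_id = defaultdict(set)
    for identifier, compound in entries:
        compounds_by_id[identifier].add(compound)
    return {
        identifier: sorted(compounds)
        for identifier, compounds in compounds_by_id.items()
        if len(compounds) > 1
    }


# Hypothetical registry entries; TMP-001 has been reused for a second compound.
registry = [
    ("TMP-001", "compound A"),
    ("TMP-002", "compound B"),
    ("TMP-001", "compound C"),
]

print(find_identifier_collisions(registry))
```

A scan like this only finds collisions after the fact. Rejecting a reused identifier at the moment it is assigned would prevent the corruption described in the table rather than merely reporting it.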

The Strategic and Operational Cost of Poor Data Quality

Gartner has estimated that the average cost of poor data quality runs into the millions for organizations (Gartner Data Quality). But even from the examples above, it is clear that poor data quality makes operating your business harder in several different ways.

1. Impairs operational decision-making ability

- Creates False Reality: Accuracy failures (Truth distorter) in manufacturing (e.g., faulty sensor data) or procurement (e.g., incorrect unit prices) cause teams and machines to execute flawed commands, resulting in product defects, scrap, or massive miscalculations of Total Cost of Ownership (TCO).

- Physical and Safety Incidents: Inaccurate production data can directly cause poor process control, leading to high-risk physical safety incidents and quality incidents in Manufacturing.

- Breaks Core Transactions: Validity failures (Process blocker) mean the system rejects critical data (e.g., Purchase Orders, production logs) because it is structurally malformed, forcing an immediate halt of the process flow.

- Corrupts Automation: Accuracy errors feed incorrect information into AI workloads, Industry 4.0, and advanced analytics, causing automated models to generate unreliable predictions or execute flawed operational adjustments.

2. Slows you down while you correct actions or repair data

- The Action Staller Effect: Timeliness failures mean data arrives too late (stale), preventing real-time control and adjustments and leading to inefficient energy consumption or prolonged cycle times.

- Operational Lag and Unplanned Downtime: Consistency failures (Integration killer) prevent the correct linking of operational data (e.g., MES part tag) to master data (ERP part ID), leading to technicians ordering the wrong part and causing extended unplanned downtime.

- Data Rework: Data quality failures force highly paid employees into time-consuming manual interventions to cleanse records, validate information, and reconcile data between systems.

3. Limits strategic decision-making ability

- Breaks Master Data Governance: Consistency and Uniqueness failures (Integration killer, Waste multiplier) prevent the creation of a Single Source of Truth, which is essential for global spend analysis or consolidated inventory reporting, destroying financial leverage.

- Increases Financial and Inventory Waste: Uniqueness errors lead to Maverick Spend in procurement and Inventory Inflation in manufacturing, tying up excessive capital due to flawed visibility.

- Blocks Audits and Compliance: Completeness failures (Traceability barrier) prevent the company from providing required audit trails or verifying supplier compliance status, leading to regulatory fines and legal exposure.

- Stifles Innovation and Growth: Completeness and Consistency failures in R&D (e.g., missing Bills of Materials, non-standardized methods) stall the handoff of new products, delaying time-to-market and eroding competitive advantage.

4. Beyond that, poor-quality data has a hidden human cost. It often creates:

- Low Data Trust: Analysts and managers routinely spend time double-checking reports and calculations because they do not trust the underlying data's accuracy or consistency.

- Last-Minute Crunch: Data issues surface only when a critical deadline hits (e.g., month-end close or a regulatory audit), forcing teams into panicked, costly, and often inaccurate clean-up efforts.

- Frustration and Burnout: The constant need for tedious manual work and firefighting due to system failures leads to widespread frustration and burnout among data stewards and domain experts.

Through focused, cost-effective data quality improvements, organizations can bring speed and robustness to their decision-making, becoming more agile and productive. But before we look at improving data quality, let us first understand the root causes of poor data quality in the next article in this data quality trilogy.